Creating Multi-touch Software

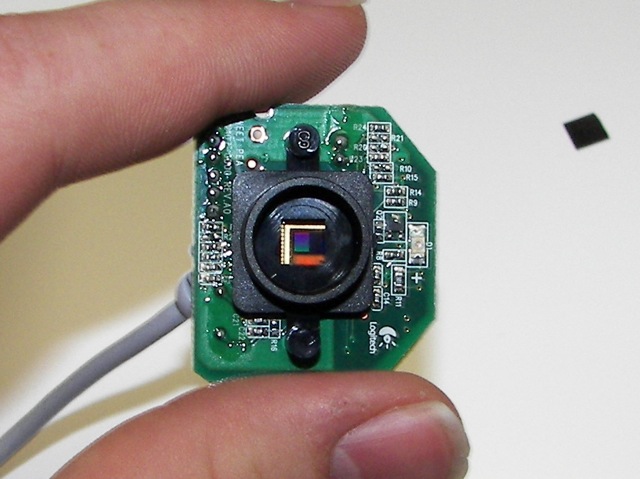

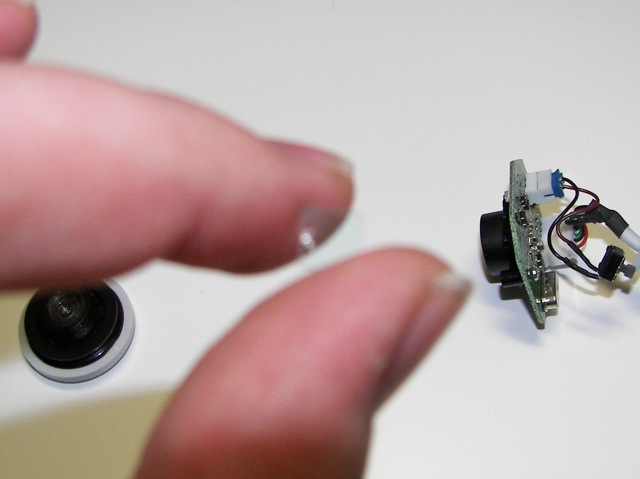

There are several components to a multi-touch software system. First, a program must detect the blobs of light in the IR camera image and relay information about the touches to other programs. Then something must receive that touch information and interpret it as events such as a click, drag, pinch, or rotation.
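To make the "interpret" half more concrete, here is a minimal sketch that turns one finger's complete lifetime into a click-like "tap", a "press", or a "drag". The thresholds and the `Sample` record are hypothetical stand-ins I chose for illustration, not values from any real tracker. Two-finger gestures such as pinch and rotation need a pair of cursors and are not covered here.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds: normalized screen units (0..1) and seconds.
TAP_MAX_DISTANCE = 0.01
TAP_MAX_DURATION = 0.3

@dataclass
class Sample:
    t: float  # seconds since the finger touched down
    x: float  # normalized position, as TUIO reports it
    y: float

def classify_single_touch(samples):
    """Classify one finger's full lifetime (touch-down to lift-off)."""
    if not samples:
        raise ValueError("need at least one sample")
    start = samples[0]
    # Use the farthest excursion, so a drag that returns home is still a drag.
    max_distance = max(math.hypot(s.x - start.x, s.y - start.y) for s in samples)
    duration = samples[-1].t - start.t
    if max_distance > TAP_MAX_DISTANCE:
        return "drag"
    return "tap" if duration <= TAP_MAX_DURATION else "press"
```

Classifying only after lift-off keeps the logic simple; a real interface would also emit drag updates while the finger is still moving.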

I do not know much about image processing, so I was very glad to find that a solution for OS X already existed. OpenTouch, an application written by Pawel Solyga for Google Summer of Code 2007, detects blobs of light in a camera feed and transmits the touch information as network messages sent to localhost. Although OpenTouch isn't as mature as its Windows-only counterpart, TouchLib, it was far enough along for my purposes.
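For readers curious what "detecting blobs of light" involves, the following is a simplified sketch of the general idea: threshold a grayscale frame, then group bright neighboring pixels into connected regions and report each region's centroid. This is not OpenTouch's code; the `threshold` and `min_area` defaults are hypothetical, and a real tracker would also subtract a background image and match blobs across frames.

```python
from collections import deque

def find_blobs(frame, threshold=200, min_area=2):
    """Return (cx, cy, area) for each bright 4-connected region in a grayscale frame."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or frame[y][x] < threshold:
                continue
            # Breadth-first flood fill over adjacent bright pixels.
            queue = deque([(x, y)])
            seen[y][x] = True
            sum_x = sum_y = area = 0
            while queue:
                px, py = queue.popleft()
                sum_x += px
                sum_y += py
                area += 1
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Tiny regions are usually sensor noise rather than fingertips.
            if area >= min_area:
                blobs.append((sum_x / area, sum_y / area, area))
    return blobs
```

Dividing each centroid by the frame width and height gives the normalized 0..1 coordinates that touch protocols typically transmit.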

Both OpenTouch and TouchLib send touch data to other applications as Tangible User Interface Object (TUIO) network messages. TUIO is a protocol designed for transmitting the state of multi-touch systems, and it is built on another protocol, Open Sound Control (OSC). While libraries for receiving TUIO messages exist in a few languages, such as C++ and Java, there was no solution for Cocoa applications, so my first step was to build a library for receiving TUIO messages in Cocoa.
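As I understand the TUIO 1.1 specification, finger touches travel on the `/tuio/2Dcur` address, and each frame carries an `alive` message listing every session ID still on the surface, `set` messages with a session ID, normalized x and y, x and y velocity, and motion acceleration, and a closing `fseq` message with a frame number. The sketch below tracks cursor state from messages that have already been decoded into `(address, args)` pairs; the class and method names are hypothetical and are not the API of my framework or of any existing TUIO client.

```python
from dataclasses import dataclass

@dataclass
class TuioCursor:
    session_id: int
    x: float        # normalized position, 0..1
    y: float
    x_speed: float  # velocity components
    y_speed: float
    accel: float    # motion acceleration

class CursorTracker:
    """Tracks live cursors from already-decoded /tuio/2Dcur messages."""

    def __init__(self):
        self.cursors = {}    # session_id -> TuioCursor
        self._alive = set()  # ids listed in the current frame's "alive" message
        self._pending = {}   # "set" updates not yet committed by "fseq"

    def handle(self, address, args):
        """Apply one message. Returns (added, updated, removed) id lists on "fseq", else None."""
        if address != "/tuio/2Dcur" or not args:
            return None
        command = args[0]
        if command == "alive":
            self._alive = set(args[1:])
        elif command == "set":
            session_id, x, y, xs, ys, accel = args[1:7]
            self._pending[session_id] = TuioCursor(session_id, x, y, xs, ys, accel)
        elif command == "fseq":
            # Any known cursor missing from "alive" has been lifted.
            removed = sorted(sid for sid in self.cursors if sid not in self._alive)
            for sid in removed:
                del self.cursors[sid]
            # Only keep updates for cursors that are still alive.
            live = {sid: c for sid, c in self._pending.items() if sid in self._alive}
            added = sorted(sid for sid in live if sid not in self.cursors)
            updated = sorted(sid for sid in live if sid in self.cursors)
            self.cursors.update(live)
            self._pending.clear()
            return added, updated, removed
        return None
```

Committing changes only at `fseq` means an application sees each frame as one consistent snapshot instead of a half-applied update.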

Because TUIO is built on OSC, I looked for a library that could parse OSC messages. Unfortunately, I could not find one that met all my needs, though WSOSC came close. There were a few issues to work around (for example, using NSData instead of NSString), but eventually I was able to use WSOSC to parse the OSC packets. When finished, my framework could parse TUIO messages and pass the TUIOCursor objects it created to a delegate in the host application.
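One reason a string type is a poor fit here is that OSC packets are binary: strings are null-terminated and padded with extra null bytes to a 4-byte boundary, and numbers are raw big-endian integers and floats. The sketch below parses OSC 1.0 messages and bundles in Python to show that layout. It is not WSOSC's API, and it handles only the `i`, `f`, and `s` argument types, which I assume are enough for TUIO cursor messages.

```python
import struct

def _read_string(data, offset):
    """Read a null-terminated OSC-string padded to a 4-byte boundary."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and padding so the next field starts on a multiple of 4.
    return text, (end + 4) & ~3

def parse_message(data):
    """Parse one OSC message into (address, [args])."""
    address, offset = _read_string(data, 0)
    type_tags, offset = _read_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "i":    # 32-bit big-endian integer
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":  # 32-bit big-endian IEEE float
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":  # padded OSC-string
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag {tag!r}")
    return address, args

def parse_packet(data):
    """Return a flat list of (address, args) from an OSC message or nested bundle."""
    if data.startswith(b"#bundle\x00"):
        messages = []
        offset = 16  # 8-byte "#bundle" string + 8-byte time tag
        while offset < len(data):
            (size,) = struct.unpack_from(">i", data, offset)
            offset += 4
            # Each element is itself a message or a bundle of `size` bytes.
            messages.extend(parse_packet(data[offset:offset + size]))
            offset += size
        return messages
    return [parse_message(data)]
```

Slicing each bundle element before recursing keeps every parse aligned to offset 0, so the 4-byte padding arithmetic stays correct inside nested bundles.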

For the user interface, I started by subclassing CALayer, the basic building block of Core Animation. I added methods that handle TUIOCursor objects and automatically scale, rotate, or reposition the layer. I prototyped a few variants of this class to display pictures or video clips dragged onto the screen from the Finder. However, dragging in each file individually was an inconvenient way to get media into my application; I needed a way to access media quickly.
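The scale, rotate, and move behavior can be derived from two cursors alone: the midpoint between the fingers gives the translation, the ratio of finger distances gives the scale, and the change in the angle of the line between them gives the rotation. The function below is a hypothetical sketch of that math, not the code from my CALayer subclass. One caution, assuming the usual conventions: TUIO's y axis points down while macOS Core Animation's points up, so flip y before computing or the rotation sign will be reversed.

```python
import math

def two_finger_transform(a0, b0, a1, b1):
    """Return (dx, dy, scale, rotation) mapping finger positions a0, b0 to a1, b1."""
    # Translation follows the midpoint between the two fingers.
    mid0 = ((a0[0] + b0[0]) / 2, (a0[1] + b0[1]) / 2)
    mid1 = ((a1[0] + b1[0]) / 2, (a1[1] + b1[1]) / 2)
    v0 = (b0[0] - a0[0], b0[1] - a0[1])
    v1 = (b1[0] - a1[0], b1[1] - a1[1])
    len0 = math.hypot(*v0)
    if len0 == 0:
        raise ValueError("fingers must start at distinct points")
    scale = math.hypot(*v1) / len0
    rotation = math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0])
    # Wrap into (-pi, pi] so a small twist never reads as a near-full turn.
    rotation = math.atan2(math.sin(rotation), math.cos(rotation))
    return mid1[0] - mid0[0], mid1[1] - mid0[1], scale, rotation
```

Computing the transform incrementally between consecutive frames, rather than from the initial touch, lets a layer keep responding smoothly when a finger is lifted and placed again.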

An image browser is an obvious application for multi-touch devices. However, I didn't want pictures of other people's kids all over my multi-touch table (not to mention that would break science fair rules). Instead, I decided to make an application to browse my favorite comic, xkcd. xkcd comics are released under a Creative Commons license, so I wouldn't need to worry about breaking copyright law either. As icing on the cake, OhNoRobot.com had transcriptions of most xkcd comics, which made the comics searchable. I quickly whipped up a program in Revolution to download the comics and store their associated transcripts in an SQLite database. I also added the ability to tag the comics, so I could mark the ones that use profanity and choose not to show them at the science fair. (This program is available for download for Windows and Mac if you want to try it out.)
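The storage side can be illustrated with a small SQLite sketch in Python: one table for comics and their transcripts, one for tags, and a search that skips anything carrying a given tag. The original program was written in Revolution, and its actual schema is not described here, so the table names, column names, and helper functions below are all hypothetical.

```python
import sqlite3

SCHEMA = """
CREATE TABLE comics (
    number     INTEGER PRIMARY KEY,  -- comic number
    title      TEXT NOT NULL,
    image_path TEXT NOT NULL,        -- local file the image was downloaded to
    transcript TEXT                  -- NULL when no transcript is available
);
CREATE TABLE tags (
    comic_number INTEGER NOT NULL REFERENCES comics(number),
    tag          TEXT NOT NULL,
    PRIMARY KEY (comic_number, tag)
);
"""

def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def add_comic(conn, number, title, image_path, transcript=None, tags=()):
    conn.execute("INSERT INTO comics VALUES (?, ?, ?, ?)",
                 (number, title, image_path, transcript))
    conn.executemany("INSERT INTO tags VALUES (?, ?)", [(number, t) for t in tags])

def search(conn, term, exclude_tag=None):
    """Return comic numbers whose transcript contains `term`, skipping comics tagged `exclude_tag`."""
    sql = """
        SELECT number FROM comics
        WHERE transcript LIKE '%' || ? || '%'
          AND number NOT IN (SELECT comic_number FROM tags WHERE tag = ?)
        ORDER BY number
    """
    return [row[0] for row in conn.execute(sql, (term, exclude_tag))]
```

SQLite's `LIKE` is case-insensitive for ASCII by default, which suits casual searching, and passing `exclude_tag=None` matches no tags, so nothing is filtered. Note that `%` and `_` typed into a search term act as wildcards in this sketch.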

Once I had all the comics and transcripts, an application that could search for these comics and display them in multi-touch-enabled Core Animation layers was only a few solid nights of coding away.